Overtone Battles the Pink-Slime Robots

Plus, sign up to be one of the first people to see our new tool!

Overtone and its co-founders have been warning about the coming flood of AI-written articles for years, and to no one’s surprise … it is already here.

Last month the website rating company NewsGuard released a report that identified dozens of websites already using generative AI to get ad revenue, with destinations like celebritiesdeaths seemingly caring little about what was on the actual page as long as it got impressions.

The problem isn’t necessarily that these sites are spewing out misinformation, because most of the information itself is innocuous. They are an example of what is called “pink slime” content, where sites generate huge amounts of content without any real work or desire to inform. What is new is that doing this is now far easier and faster than before.

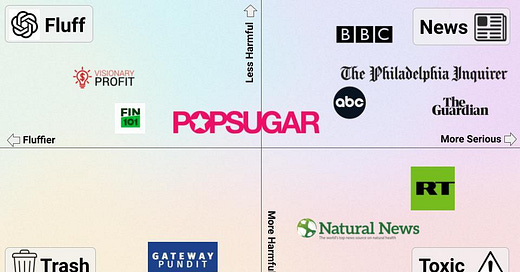

So how do you stop pink slime from polluting the internet and generating profit from that pollution? One answer is the approach of going source by source, such as having journalists individually rate sites to create a list of trustworthy sites and non-trustworthy sites, or measuring the bias of individual sites.

However, that process can be lengthy and occasionally arbitrary, and it has trouble keeping up with the speed at which new sites are created. If celebritiesdeaths.com is rated negatively, a potential polluter can just make a new site called famouspeopledeaths and go back to bringing in revenue.

Overtone, with the help of a grant from the EU’s MediaFutures initiative, has explored how we can help the advertising world support journalism and cut down on waste (in addition to being slime, the content is unlikely to help McDonald’s sell more hamburgers if they have an ad on celebritiesdeaths.com).

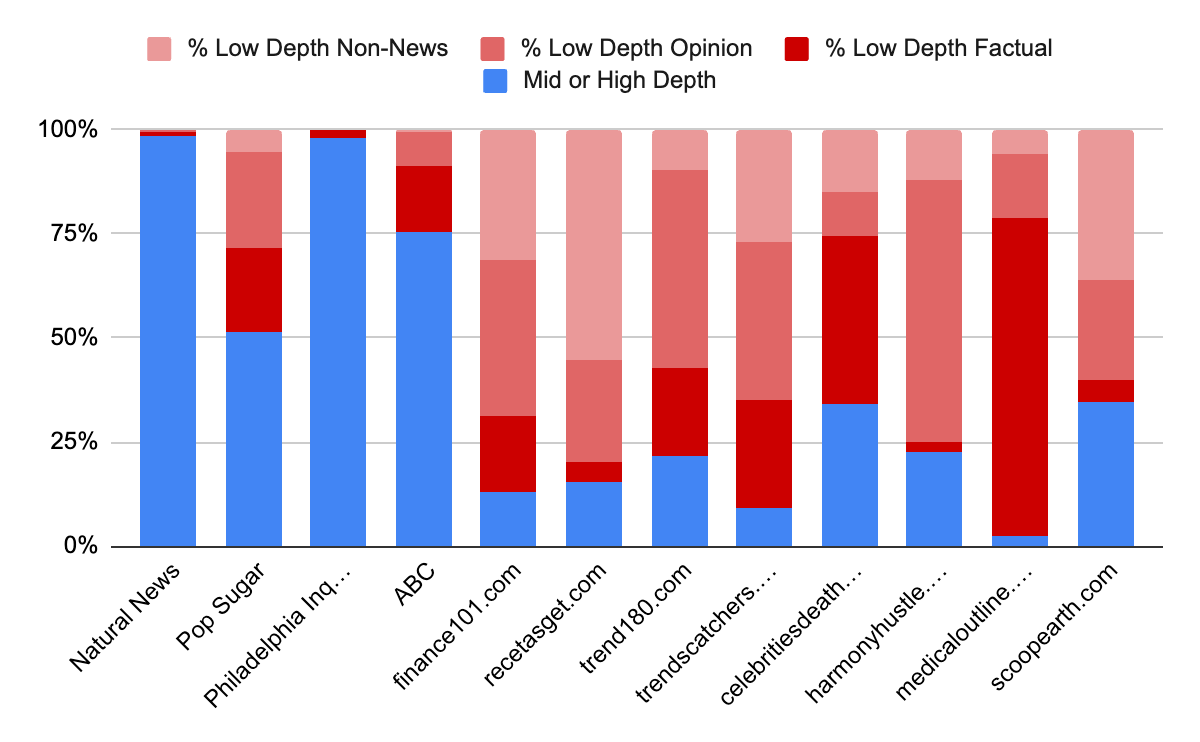

One method we have found to battle the pink slime robots (the Yoshimi method, inspired by the psychedelic rock group The Flaming Lips) is to look at the types of content that a given site is making, and compare that to “real” sites that want a relationship with an audience.

Overtone pulled in four websites from NewsGuard’s list, celebritiesdeaths, harmonyhustle, medicaloutline and scoopearth, and scored hundreds of articles from each of them with our models. We also pulled in hundreds of articles from four websites that are often on “made for advertising” lists, or lists that advertisers use to blacklist sites, even when they are not using generative AI. These were finance101.com, recetasget.com, trend180.com, and trendscatchers.co.uk.

You can see in the charts below that the sites that are just trying to garner impressions all have the same type of content: low-depth fluff. This is particularly apparent when compared to “real” sites that are trying to connect to audiences, from local news sites such as the Philadelphia Inquirer to national outlets to conspiracy websites such as Natural News, which promotes all sorts of toxicity, but is written by humans and does so in a less fluffy way.

Even snackable content websites like Popsugar have a significantly lower percentage of fluff than the made for advertising sites and the GPT-generated sites. By looking at the content itself, advertisers can choose to promote journalism rather than nonsense – and promote themselves in the process. They can also do it essentially instantly, the second a website exists. Looking at the content itself can be a better and more efficient way to look at sources.
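As a rough illustration of the comparison described above, here is a minimal Python sketch. It assumes each article has already been given a content-type label by some model. The function names, the `"fluff"` label string, the `margin` threshold and the example site names are all hypothetical, and the labels are invented placeholders rather than Overtone's scores.

```python
from collections import Counter


def fluff_share(labels):
    """Return the fraction of article labels equal to 'fluff'."""
    if not labels:
        return 0.0
    return Counter(labels)["fluff"] / len(labels)


def flag_sites(site_labels, baseline_sites, margin=0.2):
    """Flag sites whose fluff share exceeds the baseline average by `margin`.

    `margin` is an arbitrary illustrative threshold, not a tuned value.
    """
    baseline = [fluff_share(site_labels[s]) for s in baseline_sites]
    baseline_avg = sum(baseline) / len(baseline)
    return {
        site: fluff_share(labels) > baseline_avg + margin
        for site, labels in site_labels.items()
        if site not in baseline_sites
    }


# Placeholder labels, invented purely for illustration.
site_labels = {
    "reference-site.example": ["reporting", "reporting", "fluff", "analysis"],
    "new-site.example": ["fluff", "fluff", "fluff", "reporting"],
}
flags = flag_sites(site_labels, baseline_sites=["reference-site.example"])
print(flags)
```

Because the check only needs a sample of a site's articles, something like this could in principle run as soon as a new site appears, without waiting for a manual review.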

But what about the bad stuff?

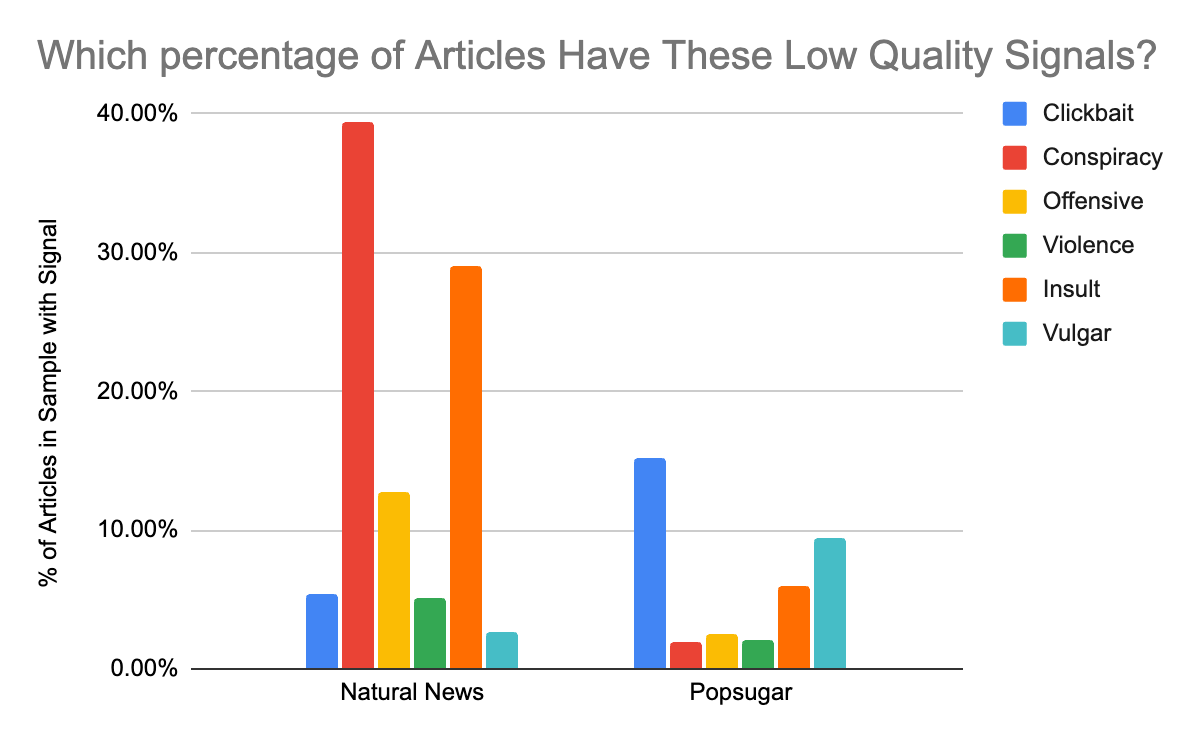

Being able to filter to get rid of the fluffy nonsense is one thing, but what about the bad stuff like Natural News? How do we make sure that sites like that aren’t amplified, even if they are new and not on a list of reviewed sites?

There are a lot of reasons why someone may choose not to like a site, from clickbait to conspiracy to calls for violence. Another of Overtone’s new models, also brought to life with the help of the EU MediaFutures initiative, is our Low Quality model, which looks for potentially problematic content and classifies it into different buckets. Sometimes I refer to it as the Anna Karenina model (all happy articles are alike; every unhappy article is unhappy in its own way).

This model looks for signals of clickbait, well-known conspiracy theories, calls for violence, offensive statements to a group, insults to an individual, swearing, general vulgarity, and pornography. It can separate negative signals such as clickbait on Popsugar from conspiracy theories in Natural News articles.
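To make the idea of separate buckets concrete, here is a small hedged Python sketch that tallies which signals show up on which site. It assumes a classifier has already attached a set of signal names to each article. The bucket identifiers, the `signal_profile` helper and the example sites are hypothetical names chosen for illustration, and the data is a placeholder.

```python
from collections import Counter, defaultdict

# Bucket names paraphrased from the list above; the identifiers are assumptions.
SIGNALS = (
    "clickbait", "conspiracy", "violence", "offensive_to_group",
    "insult_to_individual", "swearing", "vulgarity", "pornography",
)


def signal_profile(flagged_articles):
    """Count how often each signal appears per site.

    `flagged_articles` is an iterable of (site, set_of_signals) pairs.
    """
    profile = defaultdict(Counter)
    for site, signals in flagged_articles:
        unknown = set(signals) - set(SIGNALS)
        if unknown:
            raise ValueError(f"unknown signals: {sorted(unknown)}")
        profile[site].update(signals)
    return profile


# Placeholder input, invented purely for illustration.
articles = [
    ("site-a.example", {"clickbait"}),
    ("site-b.example", {"conspiracy", "swearing"}),
    ("site-a.example", {"clickbait", "swearing"}),
]
profile = signal_profile(articles)
print(profile["site-a.example"].most_common(1))
```

Keeping a per-site count for each bucket, rather than a single "bad" score, is what lets a clickbait-heavy site be told apart from a conspiracy-heavy one.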

Announcements:

Philip and Christopher are currently in Hamburg for the final event of the MediaFutures initiative. They will be showcasing the outcomes of our work, emphasising its positive impact on the internet while ensuring GDPR compliance.

Towards the end of the week, Reagan will be in Chicago to present some of our work to both publishers and advertisers at the MediaParty. Lastly, Christopher will be attending EURACTIV’s Meet the Future of AI event in Brussels on June 29. If you are attending either event, please reach out to connect!

Additionally, Overtone has been nominated as a London Startup of the Year by HackerNoon! Make sure to head over to their site and vote for us!

As we teased at the top of this newsletter, we will soon be opening our new website to the public, where individuals can sort through the internet with our tool. If you want to be one of the first with access, sign up below!